Data Analysis, AI, Cloud Engineering, App development, UI/UX

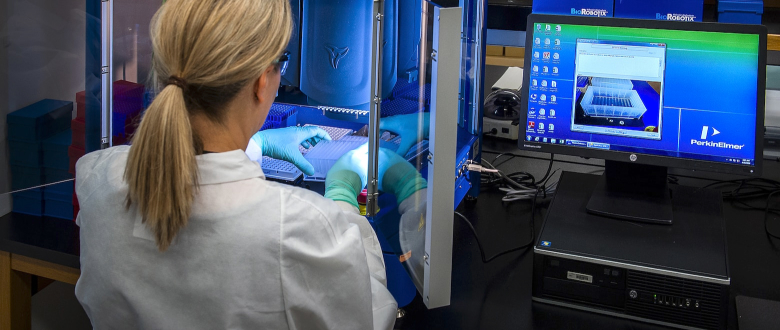

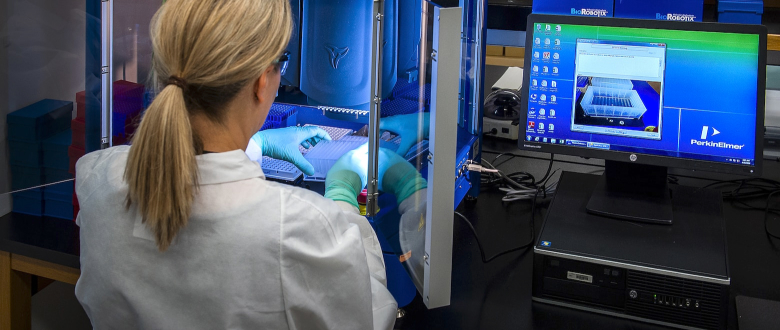

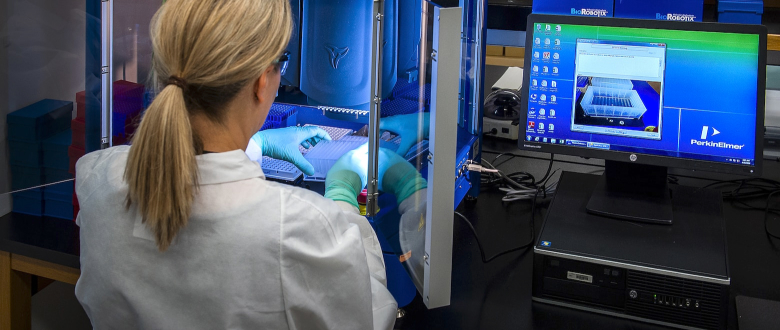

healthcare

We help develop systems that deliver real-time health and fitness metrics to users, using a range of physiological monitoring and estimation algorithms. Our solutions provide actionable insights to end users by leveraging robust data pipelines and communication modules. We work on every aspect of the product to deliver positive, meaningful outcomes.

About healthcare

SAAS, NLP, DATA SCIENCE, MICROSERVICES, CI/CD

legal

We provide research support and build models that assess document content and extract useful information for legal document processing. We build reliable backend data pipelines that keep all data secure and processing efficient. We also created an engaging web app for document lifecycle management that can process thousands of documents at once.

About legal

MACHINE LEARNING, CLOUD INFRASTRUCTURE, DASHBOARD

finTech

We help traders ride the bulls even in bearish markets, using elite algorithms to discover, evaluate, and improve the best trading strategies in their portfolios. We provide end-to-end solutions for our clients, from R&D support to a low-latency, high-performance, robust pipeline that delivers actionable insights to stakeholders securely and on time.

About finTech

Small, focused teams work on each project, building impactful technology through collaboration and knowledge sharing.

Understand

We collaborate closely with stakeholders to understand their requirements. There’s no such thing as too much information!

Plan

Equipped with that understanding, we move on to the planning phase and design a scalable solution.

Execute

With our diverse team of experts, we cater to varied needs and put the plan into action. We deliver on our promises, on time!

Collaborate

Ideas grow better when transplanted into another mind. We collaborate with an expert team to bring the best knowledge to your product.

Develop

We bring the idea to life with a skilled team of engineers who cater to every project need: backend support, AI, or app development. You name it, you have it!

Partnering for Innovation with Google Cloud

LegalSifter has several AI-based automated contract review products, which are sold to customers and partners in 19 countries today.

Apollo Neuro is a wearable technology that relieves stress and helps users relax and improve focus.

Infocusp's computer science and machine learning talent is helping teams at X create moonshot technologies and businesses.

Bryte Labs makes AI-based restorative beds that personalize and optimize each user’s sleep experience.

Agnetix builds LED-based horticultural lighting technology. Infocusp is working on the software engineering and IoT aspects of the product.

PyrAmes Health is building non-invasive blood pressure wearables and corresponding platforms that can track blood pressure continuously.

Autodesk, Inc. is a multinational software corporation whose technology spans architecture, engineering, and construction, as well as water management.

Flourish Labs is building technology solutions that help users improve their mental health and fulfill their true potential.

The team aspires to make discoveries that impact everyone and to share its research and tools to fuel progress in the tech field.

Vatic Investments is a quantitative trading firm where traders, AI researchers, and technologists collaborate to develop autonomous trading agents and cutting-edge technology.

Peer Collective’s mission is to radically increase access to high-quality mental health support through online peer counseling.

Studio Management is a data-driven asset manager investing across public equities and venture capital.

Dressing families for over 40 years, Brums is an Italian fashion brand for the children of the world.

KiActive helps you build active everyday habits and learn how every move you make contributes to feeling healthier, happier, and stronger.

NextSense’s data platform extracts biomarkers of neural state and health, used across applications including neurohealth, disease management, and drug discovery.

SmartMoving offers the best tools, training, and support to help moving companies take their businesses to the next level.

dscout is a research tool that captures people’s thoughts, reactions, and behaviors in the moment, in the form of rich video, voice, images, and text, and helps analyze them within a framework.

Opsis is an app that simplifies nutrition and optimizes health through human-centered technology, behavioral and data science, and AI.

Client Stories

We took responsibility and they succeeded!

Alan Cannistraro

CTO, Apollo Neuro

Infocusp has been an integral part of our product development at every level, from firmware to backend, from data to apps. We rely on Infocusp continuously, and turn to them first, for quick turnaround and high-quality engineering.

Lars Mahler

Chief Science Officer & Co-Founder, LegalSifter

Infocusp has been a vital partner to us over the past 5 years. Our Infocusp team members have led the design and development of our AI pipelines (natural language processing + machine learning), new algorithms, and our cloud infrastructure. In addition, they have conducted R&D activities that have pushed our models to world-class accuracy. As a result, we are able to quickly deliver new AI products, bring them to market, and meet our clients' needs. Infocusp has been a terrific partner, and they have played a key role in LegalSifter’s success.

Xina Quan

Co-founder & CEO, PyrAmes

PyrAmes has been working with Infocusp on wearable healthcare products since 2017, and the partnership continues to grow. The team is actively involved in both the development and implementation of our app and data pipeline and has been a big part of PyrAmes' success in analyzing physiological data to date.

Obi Felten

Founder & CEO, Flourish Labs

Infocusp has been a great partner for us on the development of our håp app, a self-tracking app that fosters human connection. We are an early-stage startup and the Infocusp team sometimes had to work with very sparse requirements and documentation. Their can-do attitude and expertise enabled us to iterate quickly as we co-designed the app with our target audience. We have a great working relationship despite timezone challenges collaborating across the US, UK, and India.

Ely Tsern

Co-Founder & CTO, Bryte

Infocusp and their leader, Nisarg, have provided talented engineers who helped us build our platform with quality and at a good value. They have been an impactful, reliable and dedicated partner for us.
